For Non-Idiotic Scientists, Intelligent Light Workers and All Humans With a Sincere Quest for True Knowledge and Rapid Spiritual Evolution

Georgi Alexandrov Stankov, June 1, 2017

This ebook is also available in two different PDF formats, and as PDF and ePub.

Dear Sir,

Modern physics is, to use a popular modern term, essentially “fake science”, and so has mathematics been since 1931, when the famous Austrian mathematician Kurt Gödel showed beyond any doubt with his famous incompleteness theorem (in Über formal unentscheidbare Sätze der “Principia Mathematica” und verwandter Systeme) that mathematics, as a hermeneutic discipline of abstract human thinking, cannot prove its own validity with its own means. Since then mathematics, and together with it all exact natural sciences that use mathematics as a tool to describe nature in terms of natural laws and mathematical models, have existed in the famous Foundation Crisis of Mathematics and Science (Grundlagenkrise der Mathematik).

I hope that you, as a theoretician, are well aware of this fact and consider it in your research. I say this because most scientists have swept this unpleasant truth with a huge broom under the carpet of total forgetfulness and live as innocent sinners in their self-inflicted illusion, called “physics” and “human science”.

Present-day physics is in big trouble, as the standard model cannot explain most of the phenomena observed. It is unable to integrate gravitation with the other three fundamental forces and there is no theory of gravitation at all. This deficiency is well known.

I made a survey of the main research focus of ca. 1000 representative physicists worldwide, as they present themselves on their personal websites, and found that more than 60% of them have dedicated their theoretical work to improving or replacing the standard model, which is still considered, out of inertia and for lack of viable alternative solutions, to be the pinnacle of modern physics, incorporating classical quantum mechanics, QED and QCD with the theory of relativity, but not classical mechanics.

This is the most convincing proof that the standard model is “fake science” and that it must be substituted as it does not explain anything. It is very encouraging that the majority of physicists and scientists (theoreticians and mathematicians) understand and accept this stark and shocking fact.

When the Nobel Prize Committee awarded the 2015 prize to Takaaki Kajita (Super-Kamiokande Collaboration, University of Tokyo, Kashiwa, Japan) and Arthur B. McDonald (Sudbury Neutrino Observatory Collaboration, Queen’s University, Kingston, Canada) for their experimental work showing that neutrinos might have a mass, it had to admit in the press release that:

“The discovery led to the far-reaching conclusion that neutrinos, which for a long time were considered massless (?), must have some mass, however small.

For particle physics this was a historic discovery. Its Standard Model of the innermost workings of matter had been incredibly successful, having resisted all experimental challenges for more than twenty years. However, as it requires neutrinos to be massless, the new observations had clearly showed that the Standard Model cannot be the complete theory of the fundamental constituents of the universe.” (for more information read here)

Let me summarize some of the greatest blunders that have been made in physics so far and expose it as “fake science”, only because physicists have not realized that their discipline is simply mathematics applied to the physical world and have not practised it according to established axiomatic, formalistic standards. Therefore, before one can reform physics, one should apply rigorously and methodically the principle of mathematical formalism, which was first introduced by Hilbert and led, through the famous Grundlagenstreit (foundation dispute) between the two world wars in Europe, to Gödel’s irrefutable proof of the invalidity of mathematics and to the acknowledgement of the Foundation Crisis of mathematics, which had simmered since the beginning of the 20th century after B. Russell presented his famous paradoxes (antinomies):

1) Neither photons nor neutrinos are massless particles. Physicists have failed to understand epistemologically their own definition of mass, which is based on mathematics and is in fact an “energy relationship”. All particles and systems of nature have energy and thus mass (for further information read here).

2) This eliminates the ridiculous concept of “dark matter” that accounts for 95% of the total mass in the universe according to the current standard model in cosmology which is another epitome of “fake science” as the recent dispute on the inflation hypothesis not being a real science has truly revealed (read here). The 95% missing matter is the mass of the photon space-time which is now considered to be “massless”. I have shown how one can calculate the mass of photons very easily and from there calculate the mass of matter beginning with the chemical elements (read here and see Table 1).

In this way one can easily integrate gravitation with the other three fundamental forces and explain for the first time the mechanism of gravitation by unifying classical mechanics with electromagnetism and quantum mechanics, while eliminating the esoteric search for the hypothetical graviton, which is another epic blunder of physics (read here).

3) Charge does not exist. When the current definition of charge is written in the correct mathematical manner, which physicists have failed to do for almost four centuries (actually since Antiquity), ever since electricity has been known, it can easily be shown that charge is a synonym, a pleonasm, for “geometric area” and that the SI unit 1 coulomb is equivalent to 1 square meter. An unforgivable flaw!

Read here: The Greatest Blunder of Science: „Electric Charge“ is a Synonym for „Geometric Area“

And I could go on and on and list at least 20 further epic blunders of modern physics that make it a “fake science”. At the same time, present-day physics can very easily be revised and turned from fake science into true science when one first resolves the foundation crisis of mathematics, as I already did in 1995 with the development of

The New Integrated Physical and Mathematical Axiomatics of the Universal Law

With this theoretical foundation I was able to prove that all the current distinct physical laws that make up the confusing content of today’s physics textbooks are derivations and partial applications of one Universal Law of Nature, as was postulated by Einstein (world field equation, Weltformel), H. Weyl (unified field theory), and many other prominent physicists between the two world wars.

Read here: The Universal Law of Nature

Herewith I strongly recommend that you revise your knowledge of physics, which is as false as this science is fake, and start with the new introduction to the Theory of Science of the Universal Law, which I have just published as an ebook:

An Easy Propaedeutics Into the New Physical and Mathematical Science of the Universal Law – ebook

After you have grasped the basic tenets of the new theory, you can proceed with my scientific books and articles on the new physical and mathematical theory of the Universal Law that reduces physics to applied mathematics:

- The New Integrated Physical and Mathematical Axiomatics of the Universal Law

- Volume II: The Universal Law. The General Theory of Physics and Cosmology (Full Version)

- Volume II: The Universal Law. The General Theory of Physics and Cosmology (Concise Version)

- Volume III: The General Theory of Biological Regulation. The Universal Law in Bio-Science and Medicine

Let me assure you, with my best intentions, that you have only two options:

1) Outright rejection of my proposal, based on prejudices and inflated self-esteem, which are but a manifestation of personal fears that lead to ignorance; or

2) Showing discernment, an open mind and intellectual curiosity, and making a leap in your understanding of Nature.

I have dealt with the first response on the part of conventional scientists for more than 20 years since I published my first book on the Universal Law in 1997 and I am not impressed at all by this kind of stubborn attitude that only afflicts the person that expresses it.

Besides, I know beyond any doubt that this year, 2017, is the year of the introduction of the new theory of the Universal Law on a global scale, and thus I am doing you a great favour by informing you in advance.

For with the breakthrough of the new theory of the Universal Law nothing will remain the same in science, and your allegedly secure position in your scientific institution will be just as ephemeral as the secure election of Hillary Clinton with “more than 95% certainty”, as was claimed by the fake MSM. For, believe me, there is no difference between the fake MSM, which with their obvious lies are currently in free fall, and present-day fake physics and science, which will also cease to exist in their present form within the blink of an eye in the course of this year, exactly as the fake MSM narrative collapsed within a few weeks before and after the election of Trump, notwithstanding the fact that it had controlled the opinion of the masses for decades, if not centuries. The parallels are striking and that should convince you that your current scientific position is untenable.

It is your choice to accept this unconditional offer of infinite cognitive value or reject it and stay blind for the rest of your life and I hope you make the right choice. In this case I am on your side to help you make this giant leap in human awareness and leave the current condition of cognitive blindness.

Finally I would like to make you aware of my proposal (official announcement) to the international scientific community from July 2014 that is still valid.

With best regards

Dr. Georgi Stankov

Addendum:

The same letter, somewhat modified to account for the specific modern history of Russia, was sent in Russian to ca. 1000 Russian physicists and academicians.

“In questions of science, the authority of a thousand is not worth the humble reasoning of a single individual” (Galileo Galilei).

Dear colleague,

Modern physics is, to use a popular modern term, essentially “fake science”, and so has mathematics been since 1931, when the famous Austrian mathematician Kurt Gödel showed beyond any doubt with his famous incompleteness theorem that mathematics, as a hermeneutic discipline of abstract human thinking, cannot prove its own validity with its own means (Über formal unentscheidbare Sätze der “Principia Mathematica” und verwandter Systeme). Since then mathematics, and together with it all the known natural sciences that use mathematics as a tool to describe nature in terms of natural laws and mathematical models, have existed in the famous “Foundation Crisis of Mathematics” (Grundlagenkrise der Mathematik).

I hope that you, as a theoretician, are well aware of this fact and take it into account in your research. I say this because most scientists have swept this bitter truth with a big broom under the “carpet of total oblivion” and live as innocent sinners in their self-satisfied illusion called “physics” and “human science”.

Physics today is in big trouble because the Standard Model fails to explain most of the observed phenomena. It is unable to integrate gravitation with the other three fundamental forces and, in addition, there is no theory of gravitation at all. This deficiency is well known.

I made a survey of the main research directions of ca. 1000 representative physicists worldwide, as they presented them on their personal websites, and found that more than 60% of them have dedicated their theoretical work to attempts to improve or replace the Standard Model, which is still considered, out of inertia and for lack of viable alternative solutions, to be the pinnacle of modern physics, incorporating classical quantum mechanics, QED and QCD with the theory of relativity, but without classical mechanics.

This is the most convincing proof that the Standard Model is “fake science” and must be replaced, as it explains nothing. It is very encouraging that the majority of physicists and scientists (theoreticians and mathematicians) understand and accept this stark and shocking fact.

When the Nobel Committee awarded the 2015 prize to Takaaki Kajita (Super-Kamiokande Collaboration, University of Tokyo, Kashiwa, Japan) and Arthur B. McDonald (Sudbury Neutrino Observatory Collaboration, Queen’s University, Kingston, Canada) for their experimental work showing that neutrinos might have a mass, it had to admit that:

“The discovery led to the far-reaching conclusion that neutrinos, which for a long time were considered massless (?), must have some mass, however small.

For particle physics this was a historic discovery. Its Standard Model of the innermost workings of matter had been incredibly successful, having resisted all experimental challenges for more than twenty years. However, as it requires neutrinos to be massless, the new observations had clearly showed that the Standard Model cannot be the complete theory of the fundamental constituents of the universe.” (for more information read here)

Let me summarize some of the greatest blunders made in physics so far and expose it as “fake science”, because physicists have not yet realized that their discipline is mathematics applied to the physical world and have not practised it according to established axiomatic and formalistic standards. Before physics can be reformed, one must apply strictly and methodically the principle of mathematical formalism, which was first introduced by Hilbert and led, through the famous “Grundlagenstreit” (foundation dispute) between the two world wars in Europe, to Gödel’s irrefutable proof of the invalidity of mathematics and to the acknowledgement of the foundation crisis of mathematics, which had been simmering since the beginning of the 20th century, after B. Russell presented his famous paradoxes (antinomies):

1) Neither photons nor neutrinos are massless particles. Physicists have failed to understand epistemologically the definition of mass, which is based on mathematics and is in fact an “energy relationship”. All particles and systems of nature carry energy and therefore mass (for further information see here).

2) This observation eliminates the ridiculous concept of “dark matter”, which accounts for 95% of the total mass of the universe according to the current standard model in cosmology, another epitome of “fake science”, as shown by the recent dispute about the inflation model and whether it belongs to real science at all (read here). The 95% missing matter is the mass of photon space-time, which is now considered to be “massless”. I have shown how one can very easily calculate the mass of photons and from there calculate the mass of matter, beginning with the chemical elements (read here and see Table 1).

In this way one can easily integrate gravitation with the other three fundamental forces and explain for the first time the mechanism of gravitation by unifying classical mechanics with electromagnetism and quantum mechanics, while eliminating the esoteric search for the hypothetical graviton, which is another epic blunder of physics (read here).

3) “Charge” does not exist. When the current definition of charge is written in the correct mathematical manner, which physicists have failed to do for four centuries (actually since Antiquity), ever since electricity was discovered, it can easily be shown that charge is a synonym, a pleonasm, for “geometric area”, and that the SI unit 1 coulomb is equivalent to 1 square meter. An unforgivable flaw!

Read here: The Greatest Blunder of Science: „Electric Charge“ is a Synonym for „Geometric Area“

And I could go on and on and list at least 20 further epic blunders of modern physics that make it a “fake science”. At the same time, present-day physics can very easily be revised and turned from fake science into true science if one first resolves the foundation crisis of mathematics, as I already did in 1995:

The New Integrated Physical and Mathematical Axiomatics of the Universal Law

With this theoretical foundation I was able to prove that all the current distinct physical laws that make up the confusing material of today’s physics textbooks are derivations and partial applications of one Universal Law of Nature, as was postulated by Einstein (world field equation, “Weltformel”), H. Weyl (unified field theory) and many other prominent physicists between the two world wars.

Read here: The Universal Law of Nature

Herewith I strongly recommend that you revise your knowledge of physics, which is as false as this science is fake, and start with the new introduction to the Theory of Science of the Universal Law, which I have just published in electronic form:

An Easy Propaedeutics Into the New Physical and Mathematical Science of the Universal Law – ebook

I have also written a special popular introduction in Russian to the new theory of the Universal Law and its consequences for science, technology and society, which will help you better understand the scale of this revolutionary discovery:

Universalnii (Vseobshchii) zakon. Kratkoe vvedenie v obshchniu teoriiu nauki i vliijanie eio na obshtestvo

After you have grasped the basic tenets of the new theory, you can proceed to my scientific books and articles on the new physical and mathematical theory of the Universal Law, which “reduces” physics to applied mathematics:

The New Integrated Physical and Mathematical Axiomatics of the Universal Law

Volume II: The Universal Law. The General Theory of Physics and Cosmology (Full Version)

Volume II: The Universal Law. The General Theory of Physics and Cosmology (Concise Version)

Let me assure you, with the best intentions, that you have only two options:

1) Outright rejection of my proposal, based on prejudices and inappropriately inflated self-esteem, which are merely a manifestation of personal fears that lead to ignorance; or

2) Showing discernment, open-mindedness and intellectual curiosity, and making a leap in your understanding of Nature.

I have dealt with the first response from conventional scientists for more than 20 years, ever since I published my first book on the Universal Law in 1997, and I am far from impressed by this kind of stubborn attitude, which harms only the person who expresses it.

Besides, I am certain beyond any doubt that this year, 2017, will be the year of the introduction of the new theory of the Universal Law on a global scale, and thus I am doing you a great favour by informing you in advance.

For with the breakthrough of the New Theory of the Universal Law nothing will remain the same in science, and your allegedly secure position in your scientific institution will become just as ephemeral as the guaranteed election of Hillary Clinton with a “probability of more than 95%”, as was claimed by the fake mainstream media in the West (which is why I mainly read RT, Sputnik and other Russian outlets). For, believe me, there will then be no difference between the fake Western media, which with their obvious lies are currently in “free fall”, and present-day fake physics and science, which will also cease to exist in their present form within the blink of an eye this year. In exactly the same way the fake narrative of the Western media collapsed within a few weeks before and after the election of Trump, notwithstanding the fact that they had controlled the opinion of the masses for decades, if not centuries, and with it the fake legitimacy of all the political structures created in the West, such as NATO and the EU. This will be very similar to the collapse of the Soviet Union, which I experienced first-hand during my visits to Moscow in the early 1990s, where I had the opportunity to conduct clinical trials with the Russian Academy of Sciences. These parallels are striking, and they should convince you that your current scientific position is vulnerable and that you must not repeat the mistakes of the nomenklatura in the 1990s. After all, it was Gorbachev who said that “history punishes those who come too late”, and with this phrase heralded the fall of the Berlin Wall and the Iron Curtain … and a new era of a free and sovereign Russia on the ruins of the Soviet Union, which had also followed a false doctrine.

What matters most now is that Russian scientists embrace the true tradition of continental Europe, the Euclidean axiomatization of science, and do not yield to the currently erroneous Anglo-Saxon dominance of non-holistic scientific thinking that splits everything into unconnected parts. Russian scientists should fully embrace logical axiomatic thinking in mathematics, to which they contributed decisively in the past and continue to contribute today.

It is your choice to accept my unconditional offer, which carries immeasurable value for consciousness, or to reject it and stay blind for the rest of your life, and I hope you make the right choice. In that case I am on your side to help you make this giant leap in human awareness and leave the current condition of “cognitive blindness”.

Finally, I would like to inform you of my proposal (official announcement) to the international scientific community from July 2014, which is still valid.

With best regards,

Dr. Georgi Stankov

Vancouver, Canada.

Later on I forwarded this open letter to the Russian scientists to the Russian President Vladimir Putin in the Kremlin to inform him about this new development. I met with him in the dream state a week later, where he disapproved, at the soul level, of the lack of response from the Russian scientists, who missed this great opportunity for Russia.

To the President of Russia, Vladimir Putin,

Dear Sir,

In June 2017 I sent an open letter to more than 1000 well-known Russian physicists announcing the greatest scientific discovery in the history of mankind: the discovery of the Universal Law and the development of a new physical and mathematical theory of science. I did this as a friend of the Russian people, our Slavic brothers, to whom we owe the existence of our Bulgarian state and nation. I have attached that letter as a document to this one.

The realization of this discovery will bring about the greatest revolution of mankind, and those nations that fully understand it will become the winners in human history. You recently stressed the importance of artificial intelligence (AI) in one of your speeches and stated that the winners will be those countries that develop AI. True AI can only be developed by scientists who understand the new physical and mathematical theory of the Universal Law; there should be no doubt about this.

Unfortunately, Russian physicists have not responded to this generous offer on my part. There are many reasons for this, but none of them is of a scientific, theoretical nature; they are all based on their fear of facing the unvarnished scientific truth. I would therefore like to suggest that you address scientists and experts so that they examine the new theory of the Universal Law and deliver their verdict. When they confirm its validity, and there will be no other result, I am ready to come to Russia and work with the Russian Academy of Sciences on its full implementation. This will lead to the greatest renaissance of Russian and Slavic culture, and you will earn an immortal place in the history of mankind as its political patron. Obviously we need a far-sighted and indomitable person like you to convince the scientific community to accept the scientific truth, since direct communication with Russian scientists has been hampered by their deep-seated fear of losing their professional position, by which they do the great Russian nation a disservice.

With best regards

Dr. Georgi Stankov

Foreword

While the numbers of the first two groups of people addressed in the title are asymptotically approaching the zero value in the current End Time, the number of the third, much larger group of humans will rapidly rise in the coming days during the profound change and transformation of this planet and humanity. This is the target group of the current propaedeutics into the new revolutionary Theory of Science of the Universal Law that will be the vehicle for this transformation of mankind. This group of people will become the wayshowers of the new humanity and custodians of the new ascended Gaia in all eternity.

There is no doubt that the new scientific theory of the Universal Law, as presented in its totality on this website, encompasses the entire bandwidth of all fundamental scientific, social, economic, psychological, political, gnostic and philosophical aspects and topics with which humanity has dealt throughout its long and not so glorious history in order to survive. For this reason the new science of the Universal Law will very soon become the dominant Weltanschauung (world view) of the new evolved humanity and thus a cornucopia of new revolutionary, higher-dimensional technologies that will bring infinite prosperity to all humans.

This is the divine plan of the Source for this earth and its human population and it is already a reality in all simultaneously existing upper 4D and 5D earths which we, the PAT (the Planetary Ascension Team of Gaia and humanity) and myself who had the privilege to be its captain, have been creating for a very long time.

The new theory of the Universal Law is a gift of Godhead to humanity on the verge of its glorious ascension when the end of the old dreadful era of Orion oppression meets the new beginning of the new era of enlightenment, peace, freedom and prosperity.

As all evil in this reality stems from the spiritual ignorance of the incarnated human personalities, it can be very easily eradicated when the new axiomatic scientific theory of the Universal Law based on the unity of All-That-Is is fully implemented and understood by all the people. This will streamline the collective consciousness in a yet unknown, revolutionary manner while stimulating at the same time the individual creationary potential of each and every human being. This will lead to infinite prosperity, bliss, happiness and human progress for the new mankind that will rapidly evolve to a multidimensional, transliminal, transgalactic civilisation.

The pathway to this magnificent end can only go through a full comprehension and implementation of the new theory of the Universal Law.

This publication as an ebook will remain on the front page for a further 30 days. During this time I would humbly ask all my readers and members of the PAT to send every day at least one email with a link to this publication, and the more the better, to any person, scientist, institution or website on the Internet that is deemed likely to profit from this new knowledge and shows a modicum of genuine desire to expand his/her/its awareness. In this way we shall trigger an energetic avalanche that will usher in the new era of enlightenment for mankind.

I thank, from the bottom of my heart, all my readers and the PAT for your indomitable and faithful support throughout all the years and for your participation in this, hopefully, final effort of cosmic proportions which we must perform in order to trigger the ultimate ascension leap and transmutation of Gaia and humanity in the current End Time. After all we are the ones who create and fuel the planetary ascension process guided by the Universal Law of All-That-Is.

Content

(All chapters in this book can be also found as separate publications on this website by clicking on the link.)

Introduction: The Universal Law of Nature

I. Space-Time = Energy Has only Two Dimensions (Constituents) – Space and Time

- I.1. Essay: Systems of Measurements and Units in Physics (Part 1)

- I.2. Mass and Mind: Why Mass Does Not Exist – It Is an Energy Relationship and a Dimensionless Number (Part 2)

- I.3. Mass, Matter and Photons – How to Calculate the Mass of Matter From the Mass of Photon Space-Time (Part 3)

- I.4. What is Temperature? (Part 4)

- I.5. The Greatest Blunder of Science: „Electric Charge“ is a Synonym for „Geometric Area“. Its Fundamental SI Unit „Coulomb“ is a Synonym for „Square Meter“ (Part 5)

- I.6. Galilei’s Famous Experiment of Gravitation Assesses the Universal Law with the Pythagorean Theorem

- I.7. Why the Pythagorean Theorem Is in the Core of the Current Geometric Presentation of Most Physical Laws

- I.8. Doppler Effect Is the Universal Proof for the Reciprocity of Space and Time

- I.9. The Mechanism of Gravitation – for the First Time Explained

- I.10. How to Calculate the Mass of Neutrinos?

II. Wrong Space-Time Concepts of Conventional Physics and Their Revision in the Light of the New Axiomatics of the Universal Law

- II.1. Space-Time Concept in Classical Physics

- II.2. The Concept of Relativity in Electromagnetism

- II.3. The Space-Time Concept of the Special and General Theory of Relativity

- II.4. The End of Einstein’s Theory of Relativity – It Is Applied Statistics For the Space-Time of the Physical World

III. Why Modern Cosmology Is a Fake Science

- III.1. Modern Cosmology Revised in the Light of the Universal Law – a Critical Survey

- III.2. Hubble’s Law Is an Application of the Universal Law for the Visible Universe

- III.3. The Cosmological Outlook of Traditional Physics in the Light of the Universal Law

- III.4. The Role of the CBR-Constant in Cosmology

- III.5. Pitfalls in the Interpretation of Redshifts in Failed Present-Day Cosmology

- III.6. What Do “Planck’s Parameters of the Big Bang“ Really Mean?

- III.7. The “Big Bang” Is Yet to Come in the Empty Brain Cavities of the Cosmologists – Two PAT Opinions

Further Basic Literature on the Universal Law:

- The New Integrated Physical and Mathematical Axiomatics of the Universal Law

- Volume II: The Universal Law. The General Theory of Physics and Cosmology (Full Version)

- Volume II: The Universal Law. The General Theory of Physics and Cosmology (Concise Version)

- Volume II: The Universal Law. The General Theory of Physics and Cosmology in Bulgarian (full version): Tom II: Universalnijat Zakon. Obschta Teoria po Fizika i Kosmologija

- Volume III: The General Theory of Biological Regulation. The Universal Law in Bio-Science and Medicine

Introduction: The Universal Law of Nature

Scientific definition

Conventional science has not yet discovered a single law of Nature with which all natural phenomena can be assessed without exception. Such a law should be defined as “universal”. Based on sound, self-evident scientific principles and facts, the current article analyses, from the viewpoint of the methodology of science, the formal theoretical criteria which a natural law should fulfill in order to acquire the status of a “Universal Law”.

Current concepts

In science, some known natural laws, such as Newton’s law of gravitation, are referred to as “universal”, e.g. “universal law of gravitation”. This term implies that this particular law is valid for the whole universe independently of space and time, although these physical dimensions are subjected to relativistic changes as assessed in the theory of relativity (e.g. by Lorentz’ transformations).

The same holds true for all known physical laws in modern physics, including Newton’s three laws of classical mechanics, Kepler’s laws on the rotation of planets, various laws on the behaviour of gases, fluids, and levers, the first law of thermodynamics on the conservation of energy, the second law of thermodynamics on growing entropy, diverse laws of radiation, numerous laws of electrostatics, electrodynamics, electricity, and magnetism, (summarised in Maxwell’s four equations of electromagnetism), laws of wave theory, Einstein’s famous law on the equivalence of mass and energy, Schrödinger’s wave equation of quantum mechanics, and so on. Modern textbooks of physics contain more than a hundred distinct laws, all of them being considered to be of universal character.

According to current physical theory, Nature – in fact, only inorganic, physical matter – seems to obey numerous laws which are of universal character, i.e. they hold true at any place and time in the universe, and operate simultaneously and in perfect harmony with each other, so that the human mind perceives Nature as an ordered Whole.

Empirical science, conducted as experimental research, seems to confirm the universal validity of these physical laws without exception. For this purpose, all physical laws are presented as mathematical equations. Laws of Nature, expressed without the means of mathematics, are unthinkable in the context of present-day science. Any true, natural law should be empirically verified by precise measurements, before it acquires the status of a universal physical law. All measurements in science are based on mathematics, e.g. as various units of the SI-System, which are defined as numerical relationships within mathematics, and only then derived as mathematical results from experimental measurements. Without the possibility of presenting a natural law as a mathematical equation, there is no possibility of objectively proving its universal validity under experimental conditions.

State-of-the-art in science

From the above elaboration we can conclude that the term “Universal Law” should be applied only to laws that can be presented by means of mathematics and verified without exception in experimental research. This is the only valid “proof of existence” (Existenzbeweis, Dedekind) of a “universal law” in science from a cognitive and epistemological point of view.

Until now, only the known physical laws fulfill the criterion to be universally valid within the physical universe and at the same time to be independent of the fallacies of human thinking at the individual and collective level. For instance, the universal gravitational constant G in Newton’s law of gravitation, is valid at any place in the physical universe. The gravitational acceleration of the earth g, also a basic constant of Newton’s laws of gravitation, applies only to our planet – therefore, this constant is not universal. Physical laws which contain such constants are local laws and not universal.
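The distinction between the universal constant G and the local constant g can be illustrated with a short numerical sketch. It uses the conventional textbook relation g = G·M/R², and the masses and radii below are standard reference values supplied here as assumptions; they do not come from the text. The function name `surface_gravity` is hypothetical.

```python
# Assumed reference values (not from the text): CODATA G, mean masses and radii.
G = 6.674e-11  # universal gravitational constant, m^3 kg^-1 s^-2

def surface_gravity(mass_kg, radius_m):
    """Local gravitational acceleration at the surface of a body: g = G*M/R^2."""
    return G * mass_kg / radius_m ** 2

# The same G is used for both bodies; only the local inputs differ.
g_earth = surface_gravity(5.972e24, 6.371e6)   # Earth
g_moon = surface_gravity(7.342e22, 1.7374e6)   # Moon

print(f"g on Earth: {g_earth:.2f} m/s^2")
print(f"g on Moon:  {g_moon:.2f} m/s^2")
```

The same value of G enters both calculations, while g changes with the body considered, which is the sense in which the text calls g a local constant.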

It is important to observe that science has discovered universal laws only for the physical world, defined as inanimate matter, and has failed to establish such laws for the regulation of organic matter. Bio-science and medicine are still not in the position to formulate similar universal laws for the functioning of biological organisms in general and for the human organism in particular. This is a well-known fact that discredits these disciplines as exact scientific studies.

The various bio-sciences, such as biology, biochemistry, genetics, medicine – with the notable exception of physiology, where the action potentials of cells, such as neurons and muscle cells, are described by the laws of electromagnetism – are entirely descriptive, non-mathematical disciplines. This is basic methodology of science which should be cogent to any specialist.

This conclusion holds true independently of the fact that scientists have introduced numerous mathematical models in various fields of bio-science, with which they experiment in an excessive way. Until now they have failed to show that such models are universally valid.

The general impression among scientists today is that organic matter is not subjected to similar universal laws as observed for physical matter. This observation makes, according to their conviction, for the difference between organic and inorganic matter.

The inability of scientists to establish universal laws in biological matter may be due to the fact that:

a) such laws do not exist or

b) they exist, but are so complicated, that they are beyond the cognitive capacity of mortal human minds.

The latter hypothesis has given birth to the religious notion of the existence of divine universal laws, by which God or a higher consciousness has created Nature and Life on earth and regulates them in an incessant, invisible manner.

These considerations do not take into account the fact that there is no principal difference between inorganic and organic matter. Biological organisms are, to a large extent, composed of inorganic substances. Organic molecules, such as proteins, fatty acids, and carbohydrates, contain for instance only inorganic elements, for which the above mentioned physical laws apply. Therefore, they should also apply to organic matter, otherwise they will not be universal. This simple and self-evident fact has been grossly neglected in modern scientific theory.

The discrimination between inorganic and organic matter – between physics and bio-science – is therefore artificial and exclusively based on didactic considerations. This artificial separation of scientific disciplines has emerged historically with the progress of scientific knowledge in the various fields of experimental research in the last four centuries since Descartes and Galilei founded modern science (mathematics and physics). This dichotomy has its roots in modern empiricism and contradicts the theoretical insight and the overwhelming experimental evidence that Nature – be it organic or inorganic – operates as an interrelated, harmonious entity.

Formal scientific criteria for a “Universal Law”

From this disquisition, we can easily define the fundamental theoretical criteria, which a natural law must fulfill in order to be called “Universal Law”. These are:

1. The Law must hold true for inorganic and organic matter.

2. The Law must be presented in a mathematical way, e.g. as a mathematical equation because all known physical laws are mathematical equations.

3. The Law must be empirically verified without exception by all natural phenomena.

4. The Law must integrate all known physical laws, that is to say, they must be derived mathematically from this Universal Law and must be ontologically explained by it. In this case, all known physical laws are mathematical applications of one single Law of Nature.

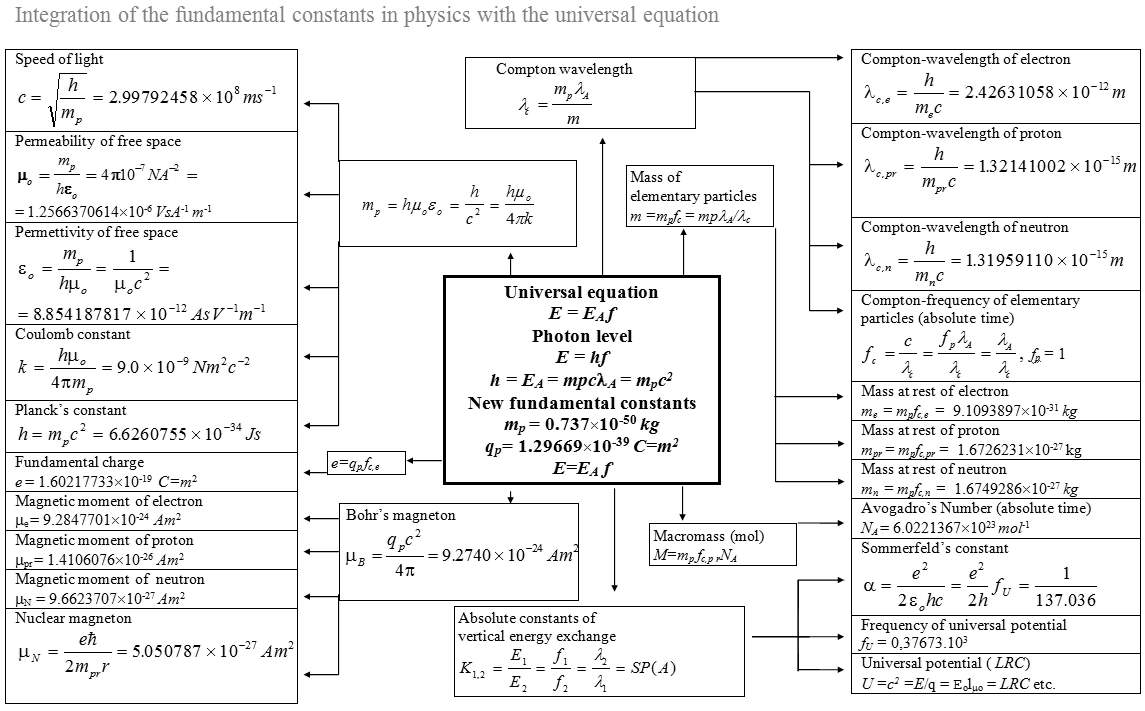

5. Alternatively, one has to prove that all known fundamental natural constants in physics, which pertain to numerous distinct physical laws are interrelated and can be derived from each other. This will be a powerful mathematical and physical evidence for the unity of Nature under one Universal Law, as all these constants can be experimentally measured by means of mathematical equations.
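As one conventional illustration of criterion 5, standard electromagnetism already relates three constants: the speed of light follows from the electric and magnetic constants via c = 1/√(ε₀μ₀). The sketch below checks this numerically. It is a textbook relation chosen as an example, not the author's own derivation, and the CODATA 2018 values are assumptions supplied here.

```python
import math

# Assumed CODATA 2018 values (not taken from the text).
EPSILON_0 = 8.8541878128e-12   # electric constant, F/m
MU_0 = 1.25663706212e-6        # magnetic constant, N/A^2
C_DEFINED = 299_792_458.0      # speed of light, m/s (exact by SI definition)

# Derive the speed of light from the other two constants.
c_derived = 1.0 / math.sqrt(EPSILON_0 * MU_0)

relative_error = abs(c_derived - C_DEFINED) / C_DEFINED
print(f"derived c = {c_derived:.6e} m/s, relative error = {relative_error:.1e}")
```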

In this way one can integrate for the first time gravitation with the other three fundamental forces (see below) and ultimately unify physics. Until now conventional physics, which culminates in the standard model, cannot integrate gravitation with the other three fundamental forces. This is a well-known fact among physicists and this circumstance discredits the whole edifice of this natural science. Physics is unable to explain the unity of Nature. This fact is not well understood by all people nowadays, because it is deliberately neglected or even covered up by all theoreticians.

The unification of physics has been the dream of many prominent physicists such as Einstein, who introduced the notion of the universal field equation, also known as “Weltformel” (world equation) or H. Weyl, who believed physics can be developed to a universal field theory.

This idea has been carried forward in such modern concepts as Grand Unified Theories (GUTs), theories of everything or string theories, however, without any feasible success.

If such a law can be discovered, it will lead automatically to the unification of physics and all natural sciences to a “General Theory of Science”.

At present, physics cannot be unified. Gravitation cannot be integrated with the other three fundamental forces in the standard model, and there is no theory of gravitation at all. Newton’s laws of gravitation describe precisely motion and gravitational forces between two interacting mass objects, but they give us no explanation as to how gravitation is exerted as an “action at a distance”, also called “long-range correlation”, or what role photons play in the transmission of gravitational forces, given the fact that gravitation is propagated with the speed of light, which is actually the speed of photons.

If this hypothetical “Universal Law” also holds true for the organisation of human society and for the functioning of human thinking, then we are allowed to speak of a true “Universal Law”. The discovery of such a law will lead to the unification of all sciences to a pan-theory of human knowledge. In its verbal form, this universal theory will be presented as a categorical system (Aristotle) without contradictions; that is to say, it will follow the formalistic principle of inner consistency.

From a mathematical point of view, the new General Theory of Science, based on the Universal Law, will be organised as an axiomatics. The potential axiomatisation of all sciences will be thus based on the “Universal Law” or a definition thereof. This will be the first and only axiom, from which all other laws, definitions, and conclusions will be derived in a logical and consistent way. All these theoretical statements will then be confirmed in an experimental manner.

These are the ideal theoretical and formalistic criteria which a “Universal Law” must fulfill. The new General Theory of Science based on such a “Universal Law” will thus be entirely mathematical, because the very Law is of mathematical origin – it has to be presented as a mathematical equation.

In this case all natural and social sciences can be principally presented as mathematical systems for their particular object of investigation, just as physics today is essentially an applied mathematics for the physical world. Exact sciences are therefore “exact”, because they are presented as mathematical systems.

The foundation crisis of mathematics

(see Wikipedia: Grundlagenkrise der Mathematik)

This methodological approach must solve one fundamental theoretical problem that torments modern theory of science. This problem is well-known as the “Foundation Crisis of mathematics”. Mathematics cannot prove its validity with its own means. As mathematics is the universal tool of presenting Nature in all exact physical disciplines, the Foundation Crisis of mathematics extends to all natural sciences. Social sciences do not claim any universal validity, as they cannot be mathematically expressed. Therefore, the Foundation Crisis of mathematics is the Crisis of Science.

Although this crisis should be basic knowledge to any scientist or theoretician, present-day scientists are completely unaware of its existence. Hence their total agnosticism with respect to the essence of Nature.

This ignorance is difficult to explain, as the foundation dispute in mathematics, known in German as Grundlagenstreit der Mathematik, has dominated the spirits of European mathematicians during the first half of the 20th century. The current ignorance of scientists about this crisis of science stems from the fact that mathematicians have not yet been able to solve the foundation crisis of mathematics and have swept it with a large broom under the carpet of total forgetfulness.

Mathematics is a hermeneutic discipline and has no external object of study. All mathematical concepts are “objects of thought” (Gedankendinge). Their validity cannot be verified in the external world, as is the case with physical laws. Mathematics could therefore prove its validity only by its own means.

This insight emerged at the end of the 19th century and was formulated for the first time as a theoretical programme by Hilbert in 1900. By this time, most mathematicians recognized the necessity of unifying the theory of mathematics through its complete axiomatisation. This was called “Hilbert’s formalism”. Hilbert himself made an effort to axiomatize geometry on the basis of a few elementary concepts, such as straight line, point, etc., which he introduced in an a priori manner.

The partial axiomatisation of mathematics gained momentum in the first three decades of the 20th century, until the Austrian mathematician Gödel proved in 1931 in his famous theorem that mathematics cannot prove its validity by mathematical, axiomatic means. He showed in an irrevocable manner that each time Hilbert’s formalistic principle of inner consistency and lack of contradiction is applied to the system of mathematics – be it geometry or algebra – it inevitably leads to a basic antinomy (paradox). This term was first introduced by Russell, who challenged Cantor’s theory of sets, the basis of modern mathematics. Gödel showed by logical means that any axiomatic approach in mathematics inevitably leads to two opposite, mutually exclusive results.

The continuum hypothesis

See also: Continuum hypothesis

Until now, no one has been able to disprove Gödel’s theorem, which he further elaborated in 1937. With this theorem the foundation crisis of mathematics began and is still ongoing as embodied in the Continuum hypothesis, notwithstanding the fact that all mathematicians after Gödel prefer to ignore it. On the other hand, mathematics seems to render valid results when it is applied to the physical world in the form of natural laws.

This observation leads to the only possible conclusion.

The discovery of the “Universal Law”

The solution of the continuum hypothesis and the elimination of the foundation crisis of mathematics can only be achieved in the real physical world and not in the hermeneutic, mental space of mathematical concepts. This is the only possible “proof of existence” that can eliminate the Foundation Crisis of mathematics and abolish the current antinomy between its validity in physics and its inability to prove the same in its own realm.

The new axiomatics that will emerge from this intellectual endeavour will no longer be purely mathematical, but will be physical and mathematical at once. Such an axiomatics can only be based on the discovery of the “Universal Law”, the latter being at once the origin of physics and mathematics. In this case, the “Universal Law” will be the first and only primary axiom, from which all scientific terms, natural laws and various other concepts in science will be axiomatically, i.e. consistently and without any inner contradiction, derived. Such axiomatics is rooted in experience and will be confirmed by all natural phenomena without exception. This axiomatics is the foundation of the General Theory of Science, which the author developed after he discovered the Universal Law of Nature in 1994.

References:

- Dr. Georgi Stankov, Stankov’s Universal Law Press

- Tipler, PA. Physics for Scientists and Engineers, 1991, New York, Worth Publishers, Inc.

- Feynman, RP. The Feynman Lectures on Physics, 1963, California Institute of Technology.

- Peebles, PJE. Principles of Physical Cosmology, 1993, Princeton, Princeton University Press.

- Berne, RM & Levy MN, Physiology, St. Louis, Mosby-Year Book, Inc.

- Bourbaki, N. Elements of the History of Mathematics, 1994, Heidelberg, Springer Verlag.

- Davies, P. Superstrings. A Theory of Everything?, 1988, Cambridge, Cambridge University Press.

- Weyl, H. Philosophie der Mathematik und Naturwissenschaft, 1990, München, Oldenbourg Verlag.

- Barrow, JD. Theories of Everything. The Quest for Ultimate Explanation, 1991, Oxford, Oxford University Press.

- Stankov, G. Das Universalgesetz. Band I: Vom Universalgesetz zur Allgemeinen Theorie der Physik und Wissenschaft, 1997, Plovdiv, München, Stankov’s Universal Law Press.

- Stankov, G. The Universal Law. Vol.II: The General Theory of Physics and Cosmology, 1999, Stankov’s Universal Law Press, Internet Publishing 2000.

- Stankov, G. The General Theory of Biological Regulation. The Universal Law in Bio-Science and Medicine, Vol.III, 1999, Stankov’s Universal Law Press, Internet Publishing 2000.

I. Space-Time = Energy Has only Two Dimensions (Constituents) – Space and Time

I.1. Systems of Measurements and Units in Physics (Part 1)

“The laws of physics express relationships between physical quantities, such as length, time, force, energy and temperature. Thus, the ability to define such quantities precisely and measure them accurately is a requisite of physics. The measurement of any physical quantity involves comparing it with some precisely defined unit value of the quantity.” (1)

This is the point of departure of any intellectual effort in physics. In this essay I shall explain why the “ability to define” physical quantities appears to be the “Achilles heel” of modern physics.

I shall also explain why physicists have failed to grasp that energy = space-time = All-That-Is, which is the very object of their science, has only two dimensions – space and time – and not six fundamental dimensions as they currently claim referring to the SI system. This is the third biggest blunder in physics. It is closely linked to their inability to understand epistemologically their own definition of mass as an energy relationship, which is a dimensionless number. This will be the topic of my next publication. The second biggest blunder is the misconception of charge, the basic physical quantity of electromagnetism and quantum mechanics, which is in fact a synonym (pleonasm) for geometric area. This blunder has been thoroughly revealed in my pivotal publication:

The Greatest Blunder of Science: “Electric Charge” is a Synonym for “Geometric Area”.

which I will present in a simple popular-scientific version later on for the sake of completion of my discussion on all scientists’ blunders in physics and related disciplines.

In many ways, the new Physical and Mathematical Axiomatics and Theory of the Universal Law is a painstaking forensic exploration of the countless blunders physicists and theoreticians have accumulated in less than four centuries since Galileo Galilei conducted his famous experiment on gravitation and laid the foundation of this natural science. Let us begin our methodological forensics with the epistemological background of the SI system, which is at the core of this experimental discipline, since not a single experiment can be conducted in physics without employing this system of basic SI units and physical quantities.

Everybody with a modicum of physical knowledge should know that the mathematical (symbolic) expression of any physical quantity consists of a number, which is a relationship between the magnitude of the assessed quantity and the arbitrarily chosen unit for this quantity, and the name of the unit. If a distance, e.g. the length of a soccer field, is 100 times as long as 1 metre (length unit of choice), we write for it “100 metres”. The magnitude of any physical quantity includes both a number and a unit. This presentation is a pure convention.
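A minimal sketch of this convention follows. The names `Quantity` and `measure` are hypothetical, and the foot-to-metre factor 0.3048 is a standard conversion supplied here as an assumption. The sketch shows that the number in “100 metres” is only a ratio to the chosen unit, so a different unit gives a different number for the same field.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Quantity:
    number: float  # ratio of the assessed magnitude to the chosen unit
    unit: str      # name of the arbitrarily chosen unit

def measure(length, unit_name, unit_length):
    """Express a length as a ratio to a unit; both inputs share one common scale."""
    return Quantity(length / unit_length, unit_name)

# The same soccer field compared with two different units of length.
# Note that the common scale used for the inputs is itself a convention.
field_in_metres = measure(100.0, "metre", 1.0)
field_in_feet = measure(100.0, "foot", 0.3048)

print(field_in_metres)
print(field_in_feet)
```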

All physical quantities can be expressed in terms of a small number of fundamental quantities and units. Most of the quantities in physics are composed quantities within mathematical formalism. This is generally acknowledged. For example, speed is expressed as a relationship of a unit of length (metre) and a unit of conventional time (second) v=s/t (m/s).

The most common physical quantities, such as force, momentum, work, energy and power, which are basic to many physical laws, can be expressed with only three fundamental quantities – length, conventional time and mass. The set of all standard units in physics is called “Système International” or SI system. It consists of a few basic quantities and their corresponding units, from which all other quantities and units can be derived by applying the method of mathematical formalism (method of definition = method of measurement). These are:

- (1) length (metre),

- (2) conventional time (second),

- (3) mass (kilogram),

- (4) temperature (kelvin),

- (5) amount of substance, also called “the mole” (mol),

- (6) current (ampere) and

- (7) charge (coulomb) (2).

The last two quantities are defined in a circular manner, so that they can be regarded as one quantity.

A major objective of this disquisition is to present theoretical and experimental evidence that these six fundamental quantities are axiomatically derived from the two constituents of space-time – space and time. I will begin with the first two quantities in this essay and will discuss the other four in follow-up publications. As all the other conventional quantities used in physics are known to be derivatives of these few quantities, this is also true for any new physical quantity.

This essay will render the fundamental proof that space-time has only two constituents, quantities, dimensions (synonyms) – space and time. This proof brings about the greatest simplification in modern physics, which is now fragmented by inadequate definitions whose epistemology has never been truly worked out in an axiomatic and logical manner. This I define in the new theory of the Universal Law as “applied mathematical formalism”, which is another term for the new Integrated Physical and Mathematical Axiomatics of the Universal Law.

By way of introduction, we begin with the definition of the SI units of space and conventional time, metre and second. The definition of these quantities is at the same time the method of measurement of their units, which is applied mathematics and/or geometry. The standard unit of length ([1d-space]-quantity), 1 metre (1 m), was originally indicated by two scratches on a bar made of platinum-iridium alloy kept at the International Bureau of Weights and Measures in Sèvres, France.

This is, however, an indirect system (a surrogate) of standard length. The actual system of comparison is the arbitrarily chosen distance between the equator and the North Pole along the meridian through Paris, which is roughly 10 million metres. Thus the earth is the initial, real reference system of distance – the metre is an anthropocentric surrogate.

As this gravitational system of reference length was found to be inexact, the standard metre is now arbitrarily defined with respect to the speed of light. This quantity is defined in the new Axiomatics of the Universal Law as [1d-space-time] of the photon level: it is the distance travelled by light in empty (?) space during a time of 1/299,792,458 second. This makes the velocity of the photon level c = 299,792,458 m/s. The photon level, of which the visible light is a narrow spectrum (a system), has a constant velocity c.
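The arithmetic behind this definition can be checked in a few lines. The sketch uses exact rational arithmetic so that no rounding enters; the variable names are illustrative only.

```python
from fractions import Fraction

# Speed of light in m/s, fixed by the SI definition quoted in the text.
C = 299_792_458

# Time interval used in the definition of the metre, in seconds.
interval = Fraction(1, 299_792_458)

# Distance travelled by light during that interval, in metres.
distance = C * interval

print(distance)  # the unit of length follows from c and the unit of time
```

The result is exactly one length unit, which shows that the metre is fixed entirely by the chosen value of c and by the definition of the second.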

This has been deduced in the new Axiomatics from the primary term of human consciousness – energy = space-time = All-That-Is – and confirmed by the theory of relativity and physical experience. The universal property of all levels of space-time – their constant specific velocity, also presented as a specific action potential EA being the universal manifestation of energy exchange – is intuitively considered in the conventional definition of the SI unit of length, 1 metre. So far, this fact has not been comprehended by all theoreticians.

Through the standard definition of space and conventional time (see below), the velocity of the photon level is voluntarily selected as the universal reference system of space-time, to which all other physical systems are set in relation (method of measurement).

The standard definition of the length unit reveals a fundamental epistemological fact that has entirely evaded the attention of physicists. The present standard definition of 1 metre by using the speed of light gives the impression of being clear-cut and unambiguous. In fact, this is not the case. The definition of this length unit is based on the principle of circular argument and involves the definition of the time unit, 1 second. If the latter unit could be defined in an a priori manner, all would be well.

When we look at the present definition of the second, which is at the same time the only possible definition of the quantity “conventional time t”, we come to the conclusion that this is not possible. The standard unit of time, being originally defined as 1/60×1/60×1/24 of the mean solar day, is now defined through the frequency of the photons emitted during a certain energy transition within the caesium atom, which is f = 9,192,631,770 per second.
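Both definitions of the second reduce to counting periods of a reference system, which a short sketch makes explicit. The variable names are illustrative; the two numbers are those quoted in the text.

```python
from fractions import Fraction

# Original definition: the second as a fraction of the mean solar day.
second_per_day = Fraction(1, 60) * Fraction(1, 60) * Fraction(1, 24)
seconds_in_day = 1 / second_per_day

# Current definition: number of caesium periods counted in one second.
CAESIUM_PERIODS_PER_SECOND = 9_192_631_770

# Caesium periods in one mean solar day, linking the two reference systems.
caesium_periods_per_day = CAESIUM_PERIODS_PER_SECOND * seconds_in_day

print(second_per_day, seconds_in_day, caesium_periods_per_day)
```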

In this case, we have again a concrete photon system with a more or less constant frequency, which has been arbitrarily selected as a reference system of time measurement. From this real reference system of space-time, an anthropocentric surrogate – the clock with the basic unit of 1 second – has been introduced. The conventional time of all events under observation is then compared with the time of the clock. Thus the measurement of time in physics and daily life is in reality:

a comparison of the frequency of events that are observed with the frequency (periodicity) of a standard photon system.

The method of definition and measurement of the quantity “conventional time t” and its unit, 1 second, is therefore a circular comparison of actual periodicities. Such quantities are pure (dimensionless) numbers that belong to SP(A). However, any experimental measurement of photon frequency involves the measurement of length – the actual quantity of time cannot be separated from the measurement of the wavelength λ, which is an actual [1d-space]-quantity.

Therefore, the two constituents of space-time cannot be separated in real terms because they are canonically conjugated. The equation of the speed of light c = λ f is intrinsic to any measurement of photon frequency and wavelength. Neither wavelength, nor frequency, can be regarded as a distinct entity – they both behave reciprocally and can only be expressed in terms of space-time:

c = λ f = [1d-space] f = [1d-space-time]p

The wavelength and frequency of photons are the actual quantities of the two constituents, space and time, of this particular level of space-time. The measurement of any particular length [1d-space] or time f = 1/t in the physical world is, in fact, an indirect comparison with the actual quantities of space and time of a photon system of reference. The introduction of the SI system obscures this fact.
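As a worked instance of c = λ f, the sketch below computes the wavelength that corresponds to the caesium reference frequency quoted earlier. The two constants are the SI values cited in the text; the variable names are illustrative.

```python
from fractions import Fraction

C = 299_792_458          # speed of light, m/s
F_CAESIUM = 9_192_631_770  # caesium reference frequency, 1/s

# Wavelength of the reference radiation: lambda = c / f (exact rational value).
wavelength = Fraction(C, F_CAESIUM)

# Reciprocity of the two constituents: their product always returns c.
product = wavelength * F_CAESIUM

print(f"wavelength = {float(wavelength):.6f} m")
```

The wavelength comes out at roughly 3.26 cm. Fixing any two of c, λ and f determines the third, which is the reciprocity the text describes.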

We conclude:

The one-dimensional space-time of the photon level [1d-space-time]p is the universal reference system of length s = [1d-space] and conventional time t = 1/f, and their units, 1 metre and 1 second. The SI system is an anthropocentric surrogate of this real reference system and can be easily eliminated. In fact, it should be eliminated in theoretical physics as it only obscures the understanding of energy = space-time = physical world = All-That-Is. This is done in the new Physical and Mathematical Theory of the Universal Law.

This conclusion is of immense importance – I have shown in Volume II that the theory of relativity uses the same intrinsic reference system to assess relativistic space and time of kinetic objects. Lorentz transformations, with which these quantities are presented, are relationships (quotients) of the space-time of the object in motion as assessed by v with the space-time of the photon level as assessed by c. These are formalistic constructions within the system of mathematics. I have proved that these quotients belong to the probability set 0≤P(A)≤1 and can be expressed in terms of statistics as summarized in the new symbol SP(A).
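The quotients that appear in the Lorentz transformations can be computed directly. The sketch below uses the conventional formulas β = v/c and γ = 1/√(1 − β²). The function names are hypothetical, and the example speed of 0.6 c is an arbitrary choice made here.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def beta(v):
    """Quotient v/c of an object's speed and the speed of light."""
    if not 0.0 <= v < C:
        raise ValueError("speed must satisfy 0 <= v < c")
    return v / C

def lorentz_factor(v):
    """Conventional Lorentz factor gamma = 1 / sqrt(1 - beta^2)."""
    return 1.0 / math.sqrt(1.0 - beta(v) ** 2)

v = 0.6 * C                 # arbitrary example speed
b = beta(v)
gamma = lorentz_factor(v)
contraction = 1.0 / gamma   # factor sqrt(1 - beta^2) applied to lengths

print(f"beta = {b:.3f}, gamma = {gamma:.3f}, contraction = {contraction:.3f}")
```

Both β and the contraction factor √(1 − β²) are dimensionless and lie between 0 and 1 for any speed below c, which matches the interval 0≤P(A)≤1 mentioned in the text.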

From this survey, it becomes evident that the physical quantities, length and conventional time, and their basic units, metre and second, are defined in a circular manner by the arbitrary choice of a real reference system of space-time – in this particular case, of photon space-time. The SI system is an epiphenomenon; it is a human convention and can be substituted by any other system through the introduction of conversion factors or better eliminated. This also applies to the other four basic quantities and their units, which will be discussed in separate publications.

Therefore, the definition of any physical quantity cannot be separated from its method of measurement, which is mathematics. The latter is, at the same time, its method of definition. Physical quantities as defined in physics do not have a distinct existence in the real world, but are intrinsically linked to their mathematical definition, which is a product of abstract human consciousness. Mathematics is a hermeneutic discipline without any external object. As any Axiomatics is also a product of human consciousness, the derivation of all known physical quantities from the primary term is essentially a problem of correct organisation of physical and mathematical thinking and not a problem that should be resolved through explorative empiricism.

Thus every method of measurement and every definition of a physical quantity are based on the principle of circular argument. This epistemological result of our methodological analysis of physical concepts is of universal character. The explanation is very simple: as every physical quantity reflects the nature of space-time as a U-subset thereof, its definition has to comply with the principle of last equivalence of the primary term which postulates that all terms that assess the primary term are equivalent independently of the choice of the particular words.

This fundamental axiom of the new Axiomatics is intuitively perceived by the physicist’s mind and is put forward in all subsequent definitions of physical quantities. As these terms are of secondary character – they are parts of the Whole – the actual principle applied in physical definitions nowadays is circulus vitiosus. The vicious character of this principle when applied to the parts and the simultaneous negligence of the primary term explains why the existence of the Universal Law has been overlooked in the past.

Physics has produced in a vicious circle a large number of concepts, which are either synonyms or partial perceptions of the primary term. Unfortunately, they have been erroneously regarded as distinct physical entities. This has given rise to the impression that these physical quantities really exist. In fact, they only exist as abstract concepts in the physicist’s mind and are introduced in experimental research through their method of measurement which is mathematics.

Space-time is termless – it is an a priori entity; the human mind, on the other hand, is a local, particular system of recent origin that has the propensity to perceive space-time and describe it in scientific terms. Science originally means “knowledge”, but it also includes the organisation of knowledge – every science is a categorical system based on the primary concept of space-time. Only the establishment of a self-consistent Axiomatics which departs from the primary term of space-time leads to an insight that there is only one Law of Nature and allows a correct organisation of human knowledge on the basis of present and future empiric data.

Notes:

1. Textbook on Physics, PA Tipler, p. 245 (I have used an earlier edition of this textbook, so that the pages may have changed. Note, George)

2. Some authors believe that candela (cd) is also a basic unit, but this is a mistake.

I.2. Mass and Mind: Why Mass Does Not Exist – It Is an Energy Relationship and a Dimensionless Number (Part 2)

Mass does not exist – it is an abstract term of our consciousness (object of thought) that is defined within mathematics. The origin of this term is energy (space-time).

Mass is a comparison of the space-time (energy) of any particular system Ex to the space-time of a reference system Er (e.g. 1 kg) that is performed under equal conditions (principle of circular argument): m = Ex / Er = SP(A), when g = constant, which is the case most of the time on this planet at the same altitude. When this comparison is done for gravitation, it is called “weighing”. The ratio that is built is a static relationship that does not consider energy exchange, although it is obtained from an energy interaction such as weighing. This explains the traditional presentation of mass as a scalar.
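The role of the condition g = constant can be sketched numerically. The example below uses the conventional relation W = m·g for the weight of an object and compares an object with a reference under two different values of g. The function names and the example values are assumptions made here for illustration.

```python
def weight(mass_kg, g):
    """Conventional weight of an object under gravitational acceleration g."""
    return mass_kg * g

def compare_with_reference(weight_x, weight_ref):
    """Dimensionless ratio obtained by weighing an object against a reference."""
    return weight_x / weight_ref

ratios = []
for g in (9.81, 1.62):  # example values of g, e.g. Earth and Moon
    w_object = weight(5.0, g)     # object under test
    w_reference = weight(1.0, g)  # reference system, "1 kg"
    ratios.append(compare_with_reference(w_object, w_reference))

print(ratios)  # the same pure number for both values of g
```

As long as the object and the reference are weighed under the same g, the acceleration cancels and only a dimensionless ratio remains.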

We can call the space-time of a reference system “1 kg” or “1 space-time” without changing anything in physics. In the new Axiomatics we ascribe mass, for didactic purposes, to the new term “structural complexity” Ks. When f = 1,

m = Ks = SP(A)[2d-space] = SP(A).

In this case [2d-space] = SP(A) = 1 is regarded as a spaceless “centre of mass“ within geometry, which is a pure abstraction of the human mind as all real objects have a volume (3d-space) and therefore cannot be spaceless.

The definition of mass in classical mechanics is as follows:

“Mass is an intrinsic property of an object that measures its resistance to acceleration.“ (1)

The word “resistance“ is a circumlocution of reciprocity: m ≈ 1/a. This definition creates a vicious circle with the definition of force in Newton’s second law:

“A force is an influence on an object that causes the object to change its velocity, that is, to accelerate”: F ≈ a. (2)

From this circular definition, we obtain for mass m ≈ 1/F. If we consider the number “1” as a unit of force, Fr = 1 (reference force), we get for the mass m = Fr/F. This is the vested definition of mass as a relationship of forces. As force is an abstract U-subset of energy, F = E/s = E when s = 1 unit, e.g. 1 m, we obtain for mass a relationship of two energies:

m = Er/E = SP(A).

We conclude:

The physical quantity mass is, per definition and method of measurement, a relationship of two energies. The gravitational energy relationship is with 1 kg which is the SI reference system with respect to earth’s gravitation that can be replaced by any other reference system. The definition of mass is equivalent to the definition of absolute time f = 1/t = E/EA = SP(A). In fact, it is a dimensionless number as is the case with all physical quantities according to their method of definition and measurement within the SI system which is mathematics (see also here).

The definition of mass follows the principle of circular argument. If we rearrange m = 1/a to ma = 1 = F = E = reference space-time (Newton’s second law), we obtain the principle of last equivalence. This elaboration of the definition of mass proves again that mathematics is the only method of definition and measurement of physical quantities.

This knowledge is basic for an understanding of various mass measurements in physics that have produced a number of fundamental natural constants. I have derived some of these constants by applying the Universal Equation as can be seen at one glance on Table 1. The definition of relativistic mass follows the same pattern. I have discussed this quantity extensively in conjunction with the traditional concept of space-time in the theory of relativity (see chapter 8.3 & equation (43) in Volume II).

The equivalence between the method of definition of physical quantities and the method of their measurement, being mathematics in both cases, can be illustrated by the measurement of weight F = E (s = 1). The measurement of weight is an assessment of gravitation as a particular energy exchange. The instruments of measurement are scales. With scales we weigh equivalent weights Fr = Fx at equilibrium; as s = 1 = constant, hence Er = Ex. This is Newton’s third law expressed as an energy law according to the axiom of conservation of action potentials (see Axiomatics).

The equilibrium of weights may be a direct comparison of two gravitational interactions with the earth, or it may be mediated through spring (elastic) forces. As all systems of space-time are U-subsets, the kind of interim force is of no importance: any particular energy exchange, such as gravitation, can be reduced to an interaction between two interacting entities (axiom of reducibility). I have reduced the entire philosophy behind the current definitions of physical laws in physics to three fundamental axioms in terms of epistemology, i.e., in terms of human cognition and with respect to the Universal Law. For further information read the new Axiomatics.

Let us now consider the simplest case when the beam of the scales is at balance. In this case, we compare the energy Er (reference weight) and Ex (object to be weighed), as they undergo equivalent gravitational interactions with the earth (equal attraction). The equivalence of the two attractions is visualized by the balance, e.g. by the horizontal position of the scale beam. This is an application of the principle of circular argument – building of equivalence and comparison, which is by the way a practical application of any mathematical equation.

Please observe that humans only employ mathematics based on mathematical equations and have no functional applied mathematics based on inequalities (≤, ≥). When these symbols are used in physics, they always lead to nonsensical conclusions, which are plainly wrong. This is very important to know.

All physical experiments assess real space-time interactions according to the principle of circular argument. This also holds for any abstract physical quantity, with which any particular energy interaction is described. All physical quantities in physics are abstract mathematical definitions and have no real existence. There is only energy (energy exchange) in All-That-Is.

Let us now describe both interactions, the reference weight Er and the object to be weighed Ex, with the earth’s gravitation according to the axiom of reducibility. For this purpose, we express the two systems in the new space-time symbolism. The space-time of the earth EE is given as gravitational potential (long-range correlation, LRC):

EE = LRCG = UG = [2d-space-time]G.

The space-time of the two gravitational objects, Er and Ex, is given as mass (energy relationship): Er = mr = SP(A)r and Ex = mx = SP(A)x. As the two interactions are equivalent when the scales are at balance, we obtain the Universal Equation for each weighing:

E = ErEG = ExEG = SP(A)r[2d-space-time]G = SP(A)x[2d-space-time]G

We can now compare the two gravitational interactions by building a quotient within mathematics:

K = SP(A) = SP(A)x[2d-space-time]G : SP(A)r[2d-space-time]G = SP(A)x/SP(A)r = mx/mr = (x) kg

We obtain the Universal Law as a rule of three. One can use the same equation to obtain the absolute constants – the coefficients of vertical and horizontal energy exchange – in the new theory of the Universal Law (see Volume II). “Weighing“ is thus based on the equivalence of the earth’s gravitation for each mass measurement, i.e., UG = g = constant. If UG were to change from one measurement to another, we would not be in a position to perform any adequate weighing, precisely, we would not know what the energy relationships (masses) between distinct objects really are.
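The quotient above can be checked numerically. The following is a minimal sketch and not part of the original derivation: the function names `weight` and `mass_ratio` and the sample values of g are illustrative assumptions. It shows that the quotient of two weights taken under the same gravitational acceleration does not depend on the value of that acceleration, and that the quotient is distorted as soon as g changes between the two measurements.

```python
def weight(mass_kg: float, g: float) -> float:
    """Gravitational force on an object, F = m * g (g assumed constant)."""
    return mass_kg * g


def mass_ratio(m_x: float, m_r: float, g: float) -> float:
    """Quotient of two weights measured under the same g (rule of three)."""
    return weight(m_x, g) / weight(m_r, g)


# Hypothetical sample values: a 2.5 kg object against a 1 kg reference,
# weighed under three different but constant values of g.
for g in (9.81, 1.62, 24.79):
    print(g, mass_ratio(2.5, 1.0, g))

# If g differs between the two measurements, the quotient no longer
# equals the mass relationship of the two objects.
distorted = weight(2.5, 9.81) / weight(1.0, 9.78)
print(distorted)
```

In each pass of the loop the printed quotient stays at 2.5 up to floating-point rounding, while the last value deviates from it.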

Any assessment of space-time requires, firstly, the building of equivalences (as mathematical equations) and, secondly, the comparison between two identical entities. “Identical” means that we can only compare physical quantities that are the same in terms of their mathematical definition and method of measurement but have a different value. This is the principle of circular argument as the only operational method of physics and mathematics. One can use the same principle to define a level as an abstract U-subset of space-time, consisting of equivalent systems or action potentials.

The principle of circular argument is the only cognitive principle of human consciousness (3).

Without it, the world would be incomprehensible. The above statement is a tautology – there is no possibility to distinguish between “cognition“ and “consciousness“. Such tautologies reveal the closed character of space-time – the principle of circular argument is the universal operation of the mind with respect to the primary term.

The above equation exemplifies how one obtains the “certain event”, which is a statistical term in physics: mr = mx = 1 kg = SP(A) = certain event = 1. If mr = SP(A) ≥ 1, the “1 object” to be weighed is equivalent to n (kg), that is, 1 = n (n = all numbers of the continuum = ∞). Within mathematical formalism we can arbitrarily define any number of the continuum, which stands for a system of space-time, as the certain event and assign it the number “1”, although it may have n elements. This mathematical procedure is fairly common in physics but has not been comprehended by all physicists in terms of the philosophy of mathematics as an abstract hermeneutic discipline without any external object.

The SI Unit Mole Is a Dimensional Number That Pertains to Time f

We can show that the basic quantity “1 mole” is defined in the same way. Any definition of physical units, e.g. SI units, follows this pattern. The standard energy system of 1 kg contains, for instance, 1000 g, 1 000 000 mg and so on (4). We can build an equivalence between the certain event “1” and any other number n, such as 1000 or 1 000 000, by adding arbitrary names of units to these numbers, which stand for real space-time systems: e.g. 1 kg = 1000 g. Thus the primary idea of space-time as conceptual equivalence is introduced in mathematics not through numbers (objects of thought), which are universal abstract signs that can be ascribed to infinitely many real objects, but through descriptive terms (words), such as “kilogram”, “gram” and “milligram”. The latter are aggregates (assemblies) of n elements, and the elements are in turn arbitrarily defined within mathematics as identical by the principle of circular argument, so as to build this set of elements as an abstract system or level of space-time.

This is because any discrimination of space-time = All-That-Is takes place first in the mind and is only then projected onto the external world, where it can be validated in experiments. This holds true for any abstract physical quantity within the SI system as well as for all elementary particles in quantum mechanics, which are first defined within mathematics (see Bohr’s atomic model in Volume II).

In modern esotericism this basic truth is explained in a somewhat simplistic manner by saying that humans are the creators of their reality, which is All-That-Is. Every human being creates and inhabits its own universe, but then these same light workers have great difficulty explaining how these subjective realities merge or intersect with each other so as to create the consensual reality of the current 3D holographic model. Obviously there is more to it, and the explanation can only come from a philosophical disquisition of the foundations of mathematics and physics, as is done in the new Axiomatics and Theory of the Universal Law.

Back to the terms in human language that are attributed to numbers when they assess real systems of space-time. These descriptive terms establish the link between hermeneutic mathematics and the real world. Such terms are of precise mathematical character – when we apply the principle of circular argument to the words “kilogram“ and “gram“, we obtain a dimensionless quotient: kilogram/gram = 1000 that belongs to the continuum. From this we conclude that human language can be “mathematized“ when the individual words, respectively their connotations, are axiomatically defined from the primary term by the principle of circular argument.
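The dimensionless quotient of two unit names can be sketched in a few lines. This is an illustration under stated assumptions, not a procedure taken from the text: the table `UNIT_IN_GRAMS` and the function `unit_quotient` are hypothetical names, and the gram is chosen arbitrarily as the common reference.

```python
from fractions import Fraction

# Each unit name is mapped to its size relative to the reference "gram".
# The choice of reference is arbitrary; only the quotients matter.
UNIT_IN_GRAMS = {
    "kilogram": Fraction(1000),
    "gram": Fraction(1),
    "milligram": Fraction(1, 1000),
}


def unit_quotient(unit_a: str, unit_b: str) -> Fraction:
    """Return the dimensionless number unit_a / unit_b."""
    return UNIT_IN_GRAMS[unit_a] / UNIT_IN_GRAMS[unit_b]


print(unit_quotient("kilogram", "gram"))       # 1000
print(unit_quotient("kilogram", "milligram"))  # 1000000
```

Exact fractions are used instead of floating-point numbers so that the quotients come out as whole numbers without rounding.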

Instead of the arbitrary units kilogram and gram, we can choose the space-time of Planck’s constant h as a reference unit of mass and call it the basic photon (see also Table 1):

E = h/c² = mp = SP(A) = 1

by comparing it with itself. In this case, we follow the pattern of the SI system, which uses photon space-time as a reference system for the basic units of space and time (see Part I).

We conclude:

As mass is a space-time relationship, that is, it only contains space and time, we should also use photon space-time as the initial reference system for the definition of mass and eliminate the present reference system of earth’s gravitation, given as 1 kg. Since these reference systems are transitive, we can compare the space-time of the basic photon h with the space-time of the standard SI system of mass, called 1 kg, and will obtain a different quotient or dimensionless number but the relations between the energies of the systems given as mass will remain the same (the Universal Law as a rule of three).

We can then express the mass of all material systems, for instance, the mass of all elementary particles and macroscopic gravitational objects, in relation to the mass of h in kg and obtain the same mass values as assessed by direct measurements (see Table 1). The reason these results agree is that mathematics is the only method of definition and measurement of mass or any other quantity.
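The change of reference system described here can be tried out numerically. The sketch below is an illustration only and does not reproduce Table 1, which is not part of this section. The numerical constants are the standard SI values of h and c and an approximate electron rest mass, none of which are quoted in the text; the function names are hypothetical. It also assumes, as the text does, that the value of h/c² is read as a mass in kg with the unit of time set to 1.

```python
H = 6.62607015e-34      # Planck's constant in J*s (SI defining value, assumption: not quoted in the text)
C = 299_792_458.0       # speed of light in m/s (SI defining value, assumption: not quoted in the text)
M_ELECTRON = 9.109e-31  # electron rest mass in kg (approximate, for illustration)


def basic_photon_mass() -> float:
    """Reference mass m_p = h / c^2, read here as kg (time unit set to 1)."""
    return H / C**2


def relative_to_basic_photon(mass_kg: float) -> float:
    """Dimensionless quotient of a mass and the reference m_p."""
    return mass_kg / basic_photon_mass()


m_p = basic_photon_mass()
n_electron = relative_to_basic_photon(M_ELECTRON)
print(m_p)         # on the order of 7.37e-51
print(n_electron)  # on the order of 1.2e20

# Changing the reference changes the quotient, but multiplying the
# quotient by the reference recovers the original value in kg.
print(n_electron * m_p)
```

The last line illustrates the transitivity claimed in the text: the value in kg is recovered, up to floating-point rounding, from the quotient taken against the new reference.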

I assume that my readers already grasp from this and my previous publication what a profound revolution this simple suggestion brings about in present-day physics, which until now claims that “photons do not have a mass”. That is why physicists cannot account for more than 90% of the theoretically calculated mass in the universe according to their cosmological models and define it in a rather obscure esoteric manner as “dark matter”. This statement alone has reduced modern cosmology to “fake science”.

Back to mathematics – the mother-father of all science. Mathematics is a transitive axiomatic system due to the closed character of space-time – it works both ways. One can either depart from the definition of mass and then confirm it experimentally in a secondary way or assess mass as a space-time relationship of real systems and then formalize this measurement into a general definition of this quantity. In both cases, the primary event is the mathematical definition according to the principle of circular argument.

When we set E = mp = h/c² = 1 and mp = (h/c²)×1 kg, the space-time of Planck’s constant h can be chosen as the initial reference system of mass measurement. This is a consistent step based on the knowledge that space-time has only two dimensions, the initial reference frame of which is photon space-time (see Part I). All other units can be derived from these two units.